Quick Start with a dummy cluster

Let’s set up the Tergite stack to run on a dummy cluster on your local machine.

We will not need an actual quantum computer. Take note, however, that the dummy cluster only returns 0 in its results.

Prerequisites

You may have to install this software if you don’t already have it installed.

Setup the Frontend

- Ensure Docker is running.

docker --help

Note: for macOS, start Docker by running this command

open -a Docker

Note: for Windows, start Docker by running this command

Start-Process "C:\Program Files\Docker\Docker\Docker Desktop.exe"

Note: for Linux, start Docker by running this command

sudo systemctl start docker

- Open another terminal

- Clone the tergite-frontend repo

git clone https://github.com/tergite/tergite-frontend.git

- Enter the tergite-frontend folder

cd tergite-frontend

- Create an mss-config.toml file with Visual Studio Code (or any other text editor).

code mss-config.toml

- Update the mss-config.toml file with the following content

# mss-config.toml

# general configurations

[general]

# the port on which MSS is running

mss_port = 8002

# the port on which the websocket is running

ws_port = 6532

# environment reflect which environment the app is to run in.

environment = "development"

# the host the uvicorn runs on.

# During testing auth on 127.0.0.1, set this to "127.0.0.1". default: "0.0.0.0"

mss_host = "127.0.0.1"

[database]

# configurations for the database

name = "testing"

# database URI

# host.docker.internal resolves to the host's 127.0.0.1

# see https://stackoverflow.com/questions/31324981/how-to-access-host-port-from-docker-container#answer-43541732

url = "mongodb://host.docker.internal:27017"

[[backends]]

name = "loke"

# the URL where this backend is running

# host.docker.internal resolves to the host's 127.0.0.1

# see https://stackoverflow.com/questions/31324981/how-to-access-host-port-from-docker-container#answer-43541732

url = "http://host.docker.internal:8000"

[auth]

# turn auth OFF or ON, default=true

is_enabled = false

cookie_domain = "127.0.0.1"

cookie_name = "tergiteauth"

[[auth.clients]]

name = "github"

client_id = "some-github-obtained-client-id"

client_secret = "some-github-obtained-client-secret"

redirect_url = "http://127.0.0.1:8002/auth/github/callback"

client_type = "github"

email_regex = "^(john\\.doe|jane|aggrey)@example\\.com$"

email_domain = "example.com"

roles = ["admin", "user"]

[[auth.clients]]

name = "puhuri"

client_id = "some-puhuri-obtained-client-id"

client_secret = "some-puhuri-obtained-client-secret"

redirect_url = "http://127.0.0.1:8002/auth/puhuri/callback"

client_type = "openid"

email_regex = "^(john\\.doe|jane)@example\\.com$"

email_domain = "example.com"

roles = ["user"]

openid_configuration_endpoint = "https://proxy.acc.puhuri.eduteams.org/.well-known/openid-configuration"

# Puhuri synchronization

# Puhuri is a resource management platform for HPC systems that is also to be used for quantum computers

[puhuri]

# turn puhuri synchronization OFF or ON, default=true

is_enabled = false

- Create a .env file with Visual Studio Code (or any other text editor).

code .env

- Update the .env file with the following content

# .env

MSS_PORT=8002

# required

ENVIRONMENT="development"

MSS_URL="http://127.0.0.1:8002"

GRAFANA_LOKI_URL=http://127.0.0.1:3100/loki/api/v1/push

LOKI_LOGGER_ID=some-generic-id

# docker LOGGING_DRIVER can be journald, json-file, local etc.

LOGGING_DRIVER=json-file

# image versions:

# Note: If you ever want the images to be rebuilt,

# you have to change the app version numbers here

# before running "docker compose up"

MSS_VERSION=v0.0.1

DASHBOARD_VERSION=v0.0.1

PROMTAIL_VERSION=2.8.3

- For Linux: open the MongoDB configuration file

code /etc/mongod.conf

- For Linux: replace the contents of that config file with the following:

# mongod.conf

# for documentation of all options, see:

# http://docs.mongodb.org/manual/reference/configuration-options/

# Where and how to store data.

storage:
  dbPath: /var/lib/mongodb
#  engine:
#  wiredTiger:

# where to write logging data.
systemLog:
  destination: file
  logAppend: true
  path: /var/log/mongodb/mongod.log

# network interfaces
net:
  port: 27017
  bindIp: 0.0.0.0

# how the process runs
processManagement:
  timeZoneInfo: /usr/share/zoneinfo

#security:

#operationProfiling:

#replication:

#sharding:

## Enterprise-Only Options:

#auditLog:- For Linux: restart mongod service and make sure that it’s active

sudo service mongod restart

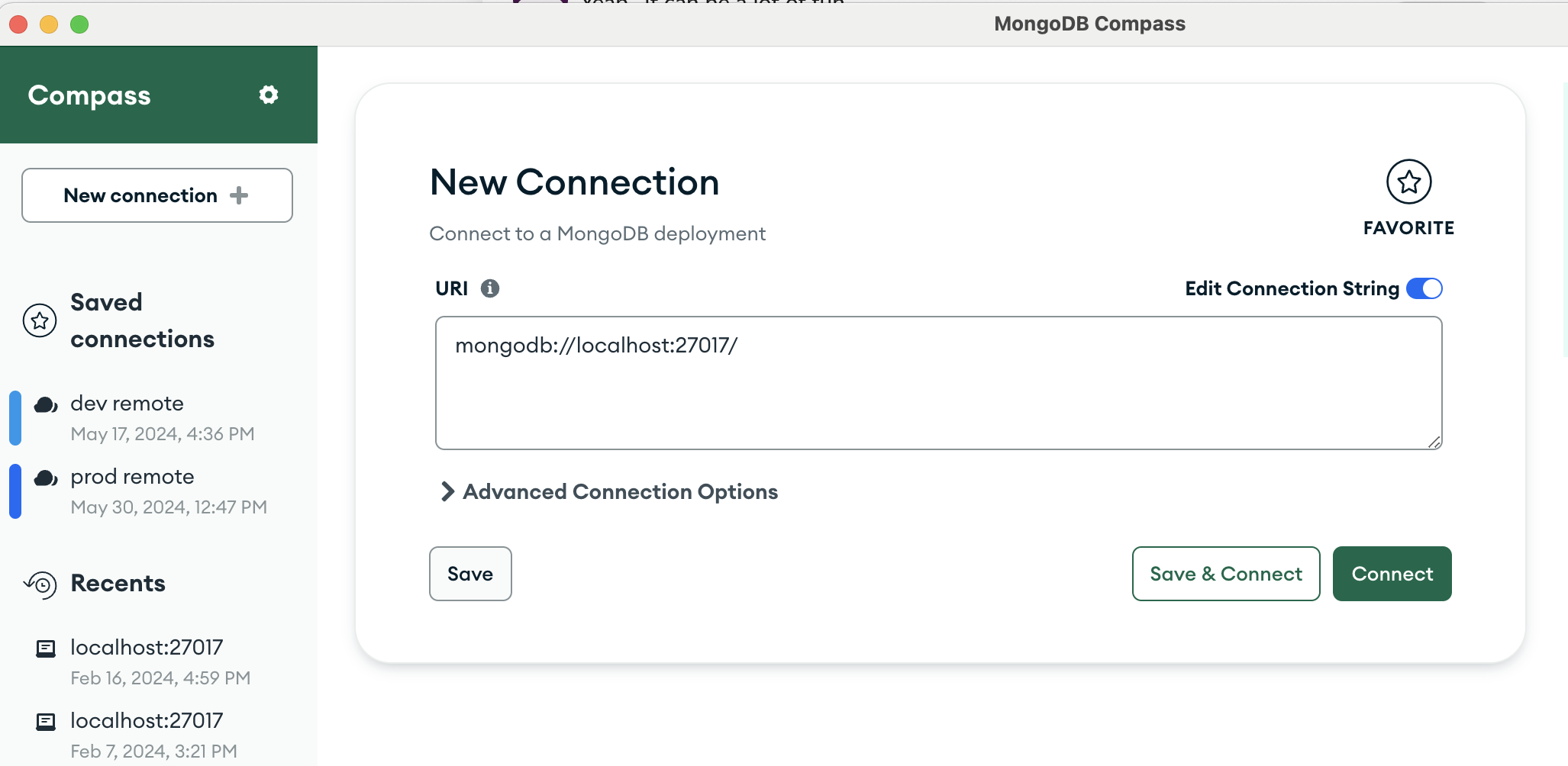

sudo service mongod status- Open the Mongo compass application and connect to the default local mongo database

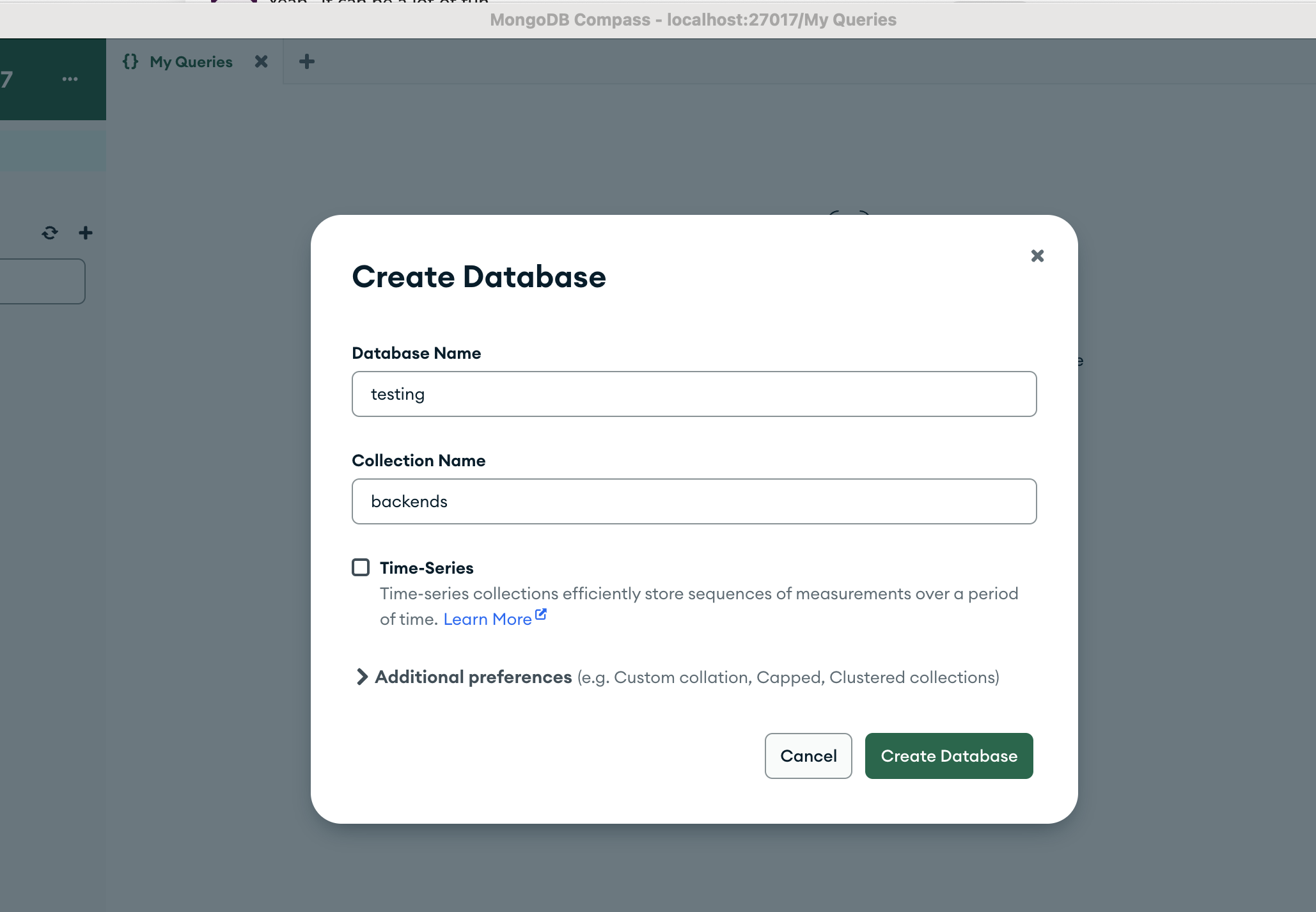

- Create a new mongo database called “testing” that contains a “backends” collection.

- Delete the old docker images of “tergite/tergite-mss”, “tergite/tergite-dashboard” from docker if they exist.

docker rmi -f tergite/tergite-mss:v0.0.1

docker rmi -f tergite/tergite-dashboard:v0.0.1

- To run the services, use the fresh-docker-compose.yml file.

docker compose -f fresh-docker-compose.yml up -d

- Remove any stale artefacts created during the docker build

docker system prune

Open your browser at

- http://localhost:8002 to see the MSS service

- http://localhost:3000 to see the Dashboard application
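Before opening the browser, it can help to confirm that both ports actually accept connections. The sketch below is not part of the Tergite tooling; `is_port_open` and `SERVICES` are hypothetical names introduced here, and the ports are the ones used in this guide (8002 for MSS, 3000 for the dashboard). It only checks that a TCP connection can be opened, not that the service behind it is healthy.

```python
import socket


def is_port_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unreachable hosts
        return False


# Ports assumed from this guide: MSS on 8002, dashboard on 3000
SERVICES = {"MSS": 8002, "Dashboard": 3000}

if __name__ == "__main__":
    for name, port in SERVICES.items():
        state = "reachable" if is_port_open("127.0.0.1", port) else "not reachable"
        print(f"{name} on port {port}: {state}")
```

If a port is reported as not reachable, the container status and logs are the next place to look.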

- To view the status of the services, run:

docker compose -f fresh-docker-compose.yml ps

- To stop the services, run:

docker compose -f fresh-docker-compose.yml stop

- To stop the services and also remove their containers, run:

docker compose -f fresh-docker-compose.yml down

- To view the logs of the docker containers and catch errors, use:

docker compose -f fresh-docker-compose.yml logs -f

See more at https://docs.docker.com/reference/cli/docker/compose/logs/

- Ensure that the services are running. If they are not, restart them.

docker compose -f fresh-docker-compose.yml up -d

Setup the Backend

- Ensure you have conda installed. (You could simply have Python 3.12 or later installed instead.)

- Ensure you have the Redis server running.

redis-server

- Open a terminal.

- Clone the tergite-backend repo

git clone https://github.com/tergite/tergite-backend.git

- Create a conda environment

conda create -n bcc -y python=3.12

conda activate bcc

- Install dependencies

cd tergite-backend

pip install -r requirements.txt

- Create a .env file with Visual Studio Code (or any other text editor).

code .env

- Update the .env file to have the following content

# .env

APP_SETTINGS=development

IS_AUTH_ENABLED=False

DEFAULT_PREFIX=loke

STORAGE_ROOT=/tmp

LOGFILE_DOWNLOAD_POOL_DIRNAME=logfile_download_pool

LOGFILE_UPLOAD_POOL_DIRNAME=logfile_upload_pool

JOB_UPLOAD_POOL_DIRNAME=job_upload_pool

JOB_PRE_PROC_POOL_DIRNAME=job_preproc_pool

JOB_EXECUTION_POOL_DIRNAME=job_execution_pool

# Main Service Server

MSS_MACHINE_ROOT_URL=http://localhost:8002

MSS_PORT=8002

# Backend Control computer

BCC_MACHINE_ROOT_URL=http://localhost:8000

BCC_PORT=8000

EXECUTOR_TYPE=quantify

LOG_LEVEL=INFO

DEBUG=false

UVICORN_LOG_LEVEL=info

- Create a quantify-config.json file with Visual Studio Code (or any other text editor).

code quantify-config.json

- Update the quantify-config.json file with the following content

{

"config_type": "quantify_scheduler.backends.qblox_backend.QbloxHardwareCompilationConfig",

"hardware_description": {

"cluster0": {

"instrument_type": "Cluster",

"ref": "internal",

"modules": {

"2": { "instrument_type": "QCM_RF" },

"3": { "instrument_type": "QCM_RF" },

"16": { "instrument_type": "QRM_RF" }

}

}

},

"hardware_options": {

"modulation_frequencies": {

"q00:mw-q00.01": { "lo_freq": 4.80e9 },

"q01:mw-q01.01": { "lo_freq": 4.23e9 },

"q00_q01:fl-q00_q01.cz": { "lo_freq": 3.90e9 },

"q00:res-q00.ro": { "lo_freq": 6.80e9 },

"q01:res-q01.ro": { "lo_freq": 6.80e9 }

}

},

"connectivity": {

"graph": [

["cluster0.module2.complex_output_0", "q00:mw"],

["cluster0.module2.complex_output_1", "q01:mw"],

["cluster0.module3.complex_output_0", "q00_q01:fl"],

["cluster0.module16.complex_output_0", "q00:res"],

["cluster0.module16.complex_output_0", "q01:res"]

]

}

}
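A common slip when editing this file is to reference a module slot in the connectivity graph that is not declared under `hardware_description`. The following is a minimal sketch of a check for that, assuming port names follow the `<cluster>.module<slot>.<port>` pattern seen above. The function `undeclared_modules` and the constant `SAMPLE_CONFIG` are hypothetical helpers for illustration, not part of quantify-scheduler or Tergite.

```python
import json
import re


def undeclared_modules(config: dict) -> list[str]:
    """Return graph ports whose cluster module is missing from hardware_description."""
    description = config["hardware_description"]
    problems = []
    for instrument_port, _device_port in config["connectivity"]["graph"]:
        # e.g. "cluster0.module16.complex_output_0" -> ("cluster0", "16")
        match = re.fullmatch(r"(\w+)\.module(\d+)\.\w+", instrument_port)
        if match is None:
            problems.append(instrument_port)
            continue
        cluster, slot = match.groups()
        if slot not in description.get(cluster, {}).get("modules", {}):
            problems.append(instrument_port)
    return problems


# Trimmed copy of the configuration shown above (hardware_options omitted)
SAMPLE_CONFIG = json.loads("""
{
  "hardware_description": {
    "cluster0": {
      "instrument_type": "Cluster",
      "ref": "internal",
      "modules": {
        "2": {"instrument_type": "QCM_RF"},
        "3": {"instrument_type": "QCM_RF"},
        "16": {"instrument_type": "QRM_RF"}
      }
    }
  },
  "connectivity": {
    "graph": [
      ["cluster0.module2.complex_output_0", "q00:mw"],
      ["cluster0.module2.complex_output_1", "q01:mw"],
      ["cluster0.module3.complex_output_0", "q00_q01:fl"],
      ["cluster0.module16.complex_output_0", "q00:res"],
      ["cluster0.module16.complex_output_0", "q01:res"]
    ]
  }
}
""")

print(undeclared_modules(SAMPLE_CONFIG))  # → []
```

To check the real file instead of the sample, load it with `json.load(open("quantify-config.json"))` and pass the result to the same function.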

- Create a quantify-metadata.yml file with Visual Studio Code (or any other text editor).

code quantify-metadata.yml

- Update the quantify-metadata.yml file with the following content

# Cluster used by the instrument coordinator

cluster0:
  instrument_type: Cluster
  ip_address: 192.168.78.101
  is_dummy: True
  modules:
    "2": { instrument_type: QCM_RF }
    "3": { instrument_type: QCM_RF }
    "16": { instrument_type: QRM_RF }

# SPI rack for DC bias on the coupler

spi_rack:
  instrument_type: SPI-Rack
  port: "/dev/ttyACM0"
  is_dummy: True
  # Keys MUST be canonical coupler IDs you use in code (e.g., 'u0')
  coupler_spi_mapping:
    u0:
      spi_module_number: 6
      dac_name: "dac0"

- Create a backend_config.toml file with Visual Studio Code (or any other text editor).

code backend_config.toml

- Update the backend_config.toml file with the following content.

# backend_config.toml

[general_config]

name = "loke"

is_active = true

characterized = true

open_pulse = true

simulator = false

version = "1.0.0"

online_date = "2024-10-09T00:00:00"

num_qubits = 2

num_couplers = 1

num_resonators = 2

dt = 1e-9

dtm = 1e-9

[device_config]

discriminators = [ "lda" ]

qubit_ids = [ "q0", "q1" ]

meas_map = [ [ 0 ], [ 1 ] ]

coordinates = [

[0, 0],

[1, 0]

]

qubit_parameters = [

"id",

"x_position",

"y_position",

"xy_drive_line",

"z_drive_line",

"frequency",

"pi_pulse_amplitude",

"pi_pulse_duration",

"pulse_type",

"pulse_sigma",

"t1_decoherence",

"t2_decoherence"

]

resonator_parameters = [

"id",

"x_position",

"y_position",

"readout_line",

"acq_delay",

"acq_integration_time",

"frequency",

"pulse_delay",

"pulse_duration",

"pulse_type",

"pulse_amplitude"

]

coupler_parameters = [

"id",

"frequency",

"frequency_detuning",

"anharmonicity",

"coupling_strength_02",

"coupling_strength_12",

"cz_pulse_amplitude",

"cz_pulse_dc_bias",

"cz_pulse_phase_offset",

"cz_pulse_duration_before",

"cz_pulse_duration_rise",

"cz_pulse_duration_constant",

"control_rz_lambda",

"target_rz_lambda",

"pulse_type"

]

[device_config.discriminator_parameters]

lda = [

"coef_0",

"coef_1",

"intercept"

]

# Single coupler between q0 and q1

[device_config.coupling_dict]

u0 = ["q0", "q1"]

[gates.x]

coupling_map = [ [ 0, 1], [1, 0] ]

qasm_def = "gate x q { U(pi, 0, pi) q; }"

parameters = [ ]

- Create a calibration.seed.toml file with Visual Studio Code (or any other text editor).

code calibration.seed.toml

- Update the calibration.seed.toml file with the following content.

[calibration_config]

[calibration_config.units.qubit]

frequency = "Hz"

t1_decoherence = "s"

t2_decoherence = "s"

anharmonicity = "Hz"

[calibration_config.units.readout_resonator]

acq_delay = "s"

acq_integration_time = "s"

frequency = "Hz"

pulse_delay = "s"

pulse_duration = "s"

pulse_amplitude = ""

pulse_type = ""

[calibration_config.units.coupler]

frequency = "Hz"

frequency_detuning = "Hz"

anharmonicity = "Hz"

coupling_strength_02 = "Hz"

coupling_strength_12 = "Hz"

cz_pulse_amplitude = ""

cz_pulse_dc_bias = ""

cz_pulse_phase_offset = "rad"

cz_pulse_duration_before = "s"

cz_pulse_duration_rise = "s"

cz_pulse_duration_constant = "s"

control_rz_lambda = "rad"

target_rz_lambda = "rad"

pulse_type = ""

# -- Qubits --

[[calibration_config.qubit]]

id = "q0"

frequency = 4.80e9

anharmonicity = -0.17e9

t1_decoherence = 30e-6

t2_decoherence = 28e-6

pi_pulse_amplitude = 0.03

pi_pulse_duration = 56e-9

pulse_sigma = 7e-9

pulse_type = "Gaussian"

[[calibration_config.qubit]]

id = "q1"

frequency = 4.23e9

anharmonicity = -0.17e9

t1_decoherence = 30e-6

t2_decoherence = 28e-6

pi_pulse_amplitude = 0.03

pi_pulse_duration = 56e-9

pulse_sigma = 7e-9

pulse_type = "Gaussian"

# -- Resonators --

[[calibration_config.readout_resonator]]

id = "q0"

acq_delay = 0

acq_integration_time = 1e-6

frequency = 6.80e9

pulse_delay = 0

pulse_duration = 1e-6

pulse_amplitude = 0.2

pulse_type = "Square"

[[calibration_config.readout_resonator]]

id = "q1"

acq_delay = 0

acq_integration_time = 1e-6

frequency = 6.80e9

pulse_delay = 0

pulse_duration = 1e-6

pulse_amplitude = 0.2

pulse_type = "Square"

# -- Couplers --

[[calibration_config.coupler]]

id = "u0"

frequency = 3.90e9

frequency_detuning = -15e6

anharmonicity = -0.17e9

coupling_strength_02 = 70e6

coupling_strength_12 = 70e6

cz_pulse_amplitude = 0.08

cz_pulse_dc_bias = 0.002

cz_pulse_phase_offset = 0.0

cz_pulse_duration_before = 80e-9

cz_pulse_duration_rise = 25e-9

cz_pulse_duration_constant = 350e-9

control_rz_lambda = 0.3

target_rz_lambda = 0.6

pulse_type = "wacqt_cz"

# -- Discriminators --

[calibration_config.discriminators.lda.q0]

intercept = -38.4344477840827

coef_0 = -98953.87504155144

coef_1 = -114154.48696231026

- Run the start script

./start_bcc.sh

- Open your browser at http://localhost:8000/docs to see the interactive API docs

Run an Experiment

- Open another terminal

- Create a new folder “tergite-test” and enter it

mkdir tergite-test

cd tergite-test

- Create a conda environment and activate it

conda create -n tergite -y python=3.12

conda activate tergite

- Install Qiskit and the Tergite SDK by running the commands below:

pip install qiskit

pip install tergite

- Create a file main.py with Visual Studio Code (or any other text editor).

code main.py

- Update the main.py file with the following content:

# main.py

"""A sample script doing a very simple quantum operation"""

import time

import qiskit.circuit as circuit

import qiskit.compiler as compiler

from tergite import Job, Tergite

if __name__ == "__main__":
    # the Tergite API URL
    API_URL = "http://localhost:8002"
    # The name of the Quantum Computer to use from the available quantum computers
    BACKEND_NAME = "loke"
    # the name of this service. For your own bookkeeping.
    SERVICE_NAME = "local"
    # the timeout in seconds for how long to keep checking for results
    POLL_TIMEOUT = 100

    # create the Qiskit circuit
    qc = circuit.QuantumCircuit(2, 2)
    qc.h(0)
    qc.cx(0, 1)
    qc.measure(0, 0)
    qc.measure(1, 1)

    # create a provider
    # provider account creation can be skipped in case you already saved
    # your provider account to the `~/.qiskit/tergiterc` file.
    # See below how that is done.
    provider = Tergite.use_provider_account(service_name=SERVICE_NAME, url=API_URL)
    # to save this account to the `~/.qiskit/tergiterc` file, add the `save=True`
    # provider = Tergite.use_provider_account(service_name=SERVICE_NAME, url=API_URL, save=True)

    # Get the Tergite backend in case you skipped provider account creation
    # provider = Tergite.get_provider(service_name=SERVICE_NAME)
    backend = provider.get_backend(BACKEND_NAME)
    backend.set_options(shots=1024)

    # compile the circuit
    tc = compiler.transpile(qc, backend=backend)

    # run the circuit
    job: Job = backend.run(tc, meas_level=2, meas_return="single")
    job.wait_for_final_state(timeout=POLL_TIMEOUT)

    # view the results
    result = job.result()
    print(result.get_counts())

- Execute the above script by running the command below.

python main.py

- It should return something like:

Results OK

{'00': 1024}

Note: We get only 0’s because we are using the dummy cluster from quantify scheduler.
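The counts dictionary maps each measured bitstring to the number of shots that produced it. As a small illustration, the sketch below converts counts into relative frequencies; `counts_to_probabilities` is a hypothetical helper, not part of Qiskit or the Tergite SDK. On an ideal device, the H plus CX circuit above prepares a Bell state, so the shots would split roughly evenly between '00' and '11', whereas the dummy cluster puts every shot in '00'.

```python
def counts_to_probabilities(counts: dict[str, int]) -> dict[str, float]:
    """Convert shot counts per bitstring into relative frequencies."""
    total = sum(counts.values())
    if total == 0:
        raise ValueError("counts must contain at least one shot")
    return {bitstring: n / total for bitstring, n in counts.items()}


# The result returned by the dummy cluster in this guide
print(counts_to_probabilities({"00": 1024}))  # → {'00': 1.0}
```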